"Towards Deep Learning Models Resistant to Adversarial Attacks" - A review

Adversarial examples

Nowadays, deep neural networks (DNN) play a central role in lot of security-critical systems such as facial recognition and autonomous driving. However, recent research has shown that such DNN models can be fooled by adversarial examples. An adversarial example is an input which has been tampered in a way such that a DNN classifies it incorrectly.

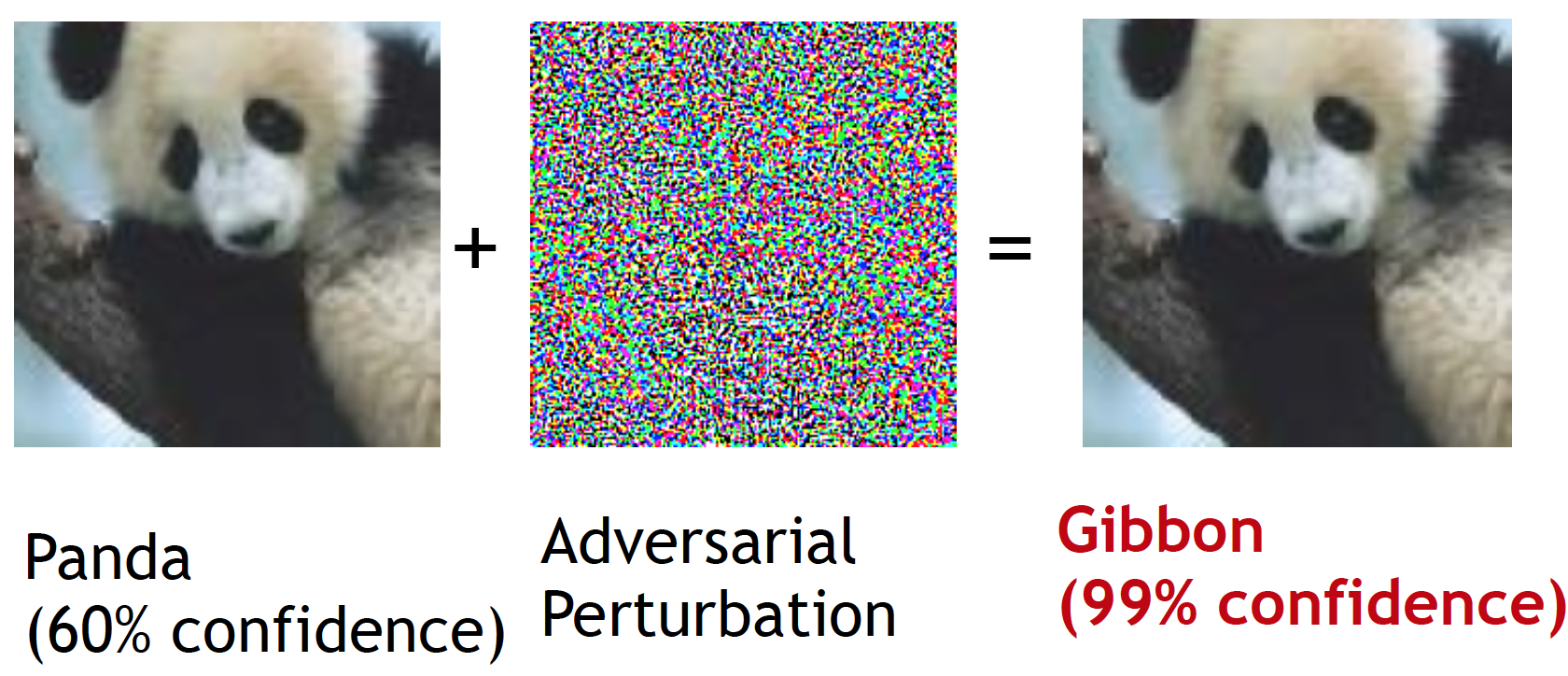

This popular example shows how a DNN (in this case, GoogLeNet) can be misled by adding an adversarial perturbation. Despite no difference is visible to human eye, the DNN confidently classifies the updated image as a 'gibbon'.

An adversarial example. (Image source: Goodfeellow et al.)

The excellent blog post by researchers at OpenAI provides a glimpse of real world AI safety issues which can be caused by adversarial attacks.

Generation of adversarial examples

Defenses against adversarial examples

Adversarial training

PGD

This approach leverages the min-max formulation proposed by Lyu et al., 2015 to incorporate adversarial perturbations into the loss function.

A standard classification problem can be expressed as finding parameters which minimizes a loss function (for example, cross-entropy) for a set of examples with corresponding labels . If we assume that the examples are sampled from a distribution , the optimization problem reduces to finding parameter which minimizes the risk . If we want our classifier to stay robust to an adversary which perturbs an example by , the loss function is updated to include this perturbation.

The min-max formulation, as evident from its name, consists of a minimization problem and a maximization problem. The inner maximization problem is tasked with adding a perturbation to the input x which achieves a higher loss. On the other hand, the goal of the outer minimization problem is to learn model parameters minimizing the loss which is being maximized by the inner maximization.

According to this definition, if the adversarial loss of a model is small for all adversarial examples , the model should be robust for all allowed adversarial attacks.

How to solve this min-max optimization problem?

Stochastic Gradient Descent (SGD) based backpropagation can not be directly applied as this optimization problem is non-convex. Lyu et al., obtained a closed form solution for worst-case by first approximating the inner maximization problem with Taylor series and then applying Lagrangian multiplier method. This simplifies the optimization problem to minimizing , which can be written as a regularization term with Taylor series. For -bounded attacks (commonly used type of attacks) this simplified formulation becomes identical to the fast gradient sign (FGSM) attack.